A team of researchers from Zhejiang University has introduced a new method aimed at improving federated learning, a machine learning framework that enables collaborative training of deep models across decentralized clients while preserving data privacy. Their study, titled “FedMcon: an adaptive aggregation method for federated learning via meta controller,” addresses the limitations of the traditional federated averaging algorithm (FedAvg), which struggles with heterogeneous client data distributions.

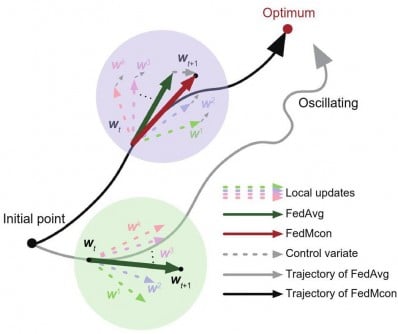

The conventional FedAvg method aggregates client models through a fixed weighted linear combination, typically weighting each client by its share of the training data. This approach often fails to accommodate the diverse data distributions and training dynamics encountered in real-world scenarios, resulting in slow convergence and reduced generalization performance. To overcome these challenges, the researchers proposed a novel aggregation method called FedMcon, which operates within a meta-learning framework.
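For readers unfamiliar with the baseline, the weighted combination used by FedAvg can be sketched in a few lines. This is a minimal illustration and not code from the paper: the function name `fedavg` and the flat-list representation of model parameters are assumptions made for the example, and the weights are taken to be proportional to each client's local sample count, as in the standard formulation of FedAvg.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """Average client parameter vectors, weighted by local sample counts.

    Hypothetical helper for illustration: each client's model is
    flattened into a list of floats of equal length.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need one sample count per client model")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n_samples in zip(client_params, client_sizes):
        weight = n_samples / total  # fixed weight, independent of data quality
        for j in range(dim):
            global_params[j] += weight * params[j]
    return global_params
```

The weights here are fixed by dataset size alone, which is the rigidity the article describes: nothing in the rule reacts to how far a client's data distribution drifts from the others.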

FedMcon introduces a learnable controller that is trained on a small proxy dataset. This controller serves as the aggregator, adaptively combining local models into a more robust global model that aligns with the intended objectives. The experimental results indicate that FedMcon significantly improves performance, particularly when client data are highly non-IID (not independent and identically distributed).
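The idea of learning the aggregation from a proxy dataset can be illustrated with a deliberately simplified toy. This is not the controller described in the paper, whose architecture is not covered here. The sketch assumes each client model is a single slope `w` for the predictor `y = w * x`, that the "controller" is just one learnable logit per client turned into weights by a softmax, and that the logits are trained by gradient descent on the mean squared error over the proxy data. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn logits into positive aggregation weights that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(client_weights: Sequence[float], logits: Sequence[float]) -> float:
    """Combine client slopes using the softmax of the learnable logits."""
    alphas = softmax(logits)
    return sum(a * w for a, w in zip(alphas, client_weights))


def proxy_loss(w: float, proxy: Sequence[Tuple[float, float]]) -> float:
    """Mean squared error of y = w * x on the proxy dataset."""
    return sum((w * x - y) ** 2 for x, y in proxy) / len(proxy)


def train_controller(client_weights: Sequence[float],
                     proxy: Sequence[Tuple[float, float]],
                     steps: int = 500,
                     lr: float = 0.1) -> List[float]:
    """Learn aggregation logits by gradient descent on the proxy loss."""
    logits = [0.0] * len(client_weights)  # start from uniform averaging
    for _ in range(steps):
        alphas = softmax(logits)
        w_global = sum(a * w for a, w in zip(alphas, client_weights))
        # dL/dw for the squared-error loss
        dloss_dw = sum(2 * (w_global * x - y) * x for x, y in proxy) / len(proxy)
        # Chain rule through the softmax: dw/dz_k = alpha_k * (w_k - w_global)
        grads = [dloss_dw * a * (w - w_global)
                 for a, w in zip(alphas, client_weights)]
        logits = [z - lr * g for z, g in zip(logits, grads)]
    return logits
```

As a usage example, with client slopes `[1.0, 2.0, 6.0]` and proxy points drawn from `y = 2x`, uniform averaging yields a slope of about 3.0, whereas `train_controller` shifts weight away from the outlying client until the aggregated slope fits the proxy data. The design point is that the aggregation weights respond to a target objective rather than being fixed in advance.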

Performance Metrics and Experimental Results

The research team conducted extensive evaluations of FedMcon across three diverse datasets: MovieLens 1M, FEMNIST, and CIFAR-10. These assessments encompassed various federated learning scenarios, including cross-silo and cross-device settings, while varying parameters such as the levels of non-IID data, the number of local epochs, and the number of participating clients.

Notably, FedMcon achieved a communication speedup of up to 19 times in one federated learning setting. The method consistently outperformed other leading federated learning techniques across several performance metrics, including Area Under the Curve (AUC), Hit Rate (HR), Normalized Discounted Cumulative Gain (NDCG), and top-1 accuracy. It also converged faster than the methods it was compared against.
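Two of the ranking metrics named above, HR and NDCG, are less widely known than accuracy, so a short sketch may help. It assumes a leave-one-out recommendation protocol in which each user has exactly one held-out target item and metrics are computed over the top `k` ranked items. That protocol and the cutoff `k` are assumptions for illustration, as the evaluation details are not given here, and the function names are hypothetical.

```python
import math
from typing import Sequence


def hit_rate_at_k(ranked_items: Sequence[int], target: int, k: int) -> float:
    """Return 1.0 if the held-out item appears in the top-k list, else 0.0."""
    return 1.0 if target in ranked_items[:k] else 0.0


def ndcg_at_k(ranked_items: Sequence[int], target: int, k: int) -> float:
    """NDCG@k with a single relevant item.

    With one relevant item the ideal DCG is 1, so the score reduces to
    1 / log2(position + 2), where position is the 0-based rank.
    """
    top_k = list(ranked_items[:k])
    if target not in top_k:
        return 0.0
    position = top_k.index(target)
    return 1.0 / math.log2(position + 2)
```

HR only records whether the target item made the list, while NDCG also rewards placing it near the top. In practice both values are averaged over all users.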

The paper, co-authored by Tao Shen, Zexi Li, Ziyu Zhao, Didi Zhu, Zheqi Lv, Kun Kuang, Shengyu Zhang, Chao Wu, and Fei Wu, is available as open access. Researchers and practitioners interested in the details can find the complete study at this link: https://doi.org/10.1631/FITEE.2400530.

In summary, FedMcon replaces the weighted linear combination used by FedAvg with a learned aggregator, and the reported results show faster convergence and better performance under heterogeneous client data. This paves the way for more effective federated learning in environments where data privacy is paramount.