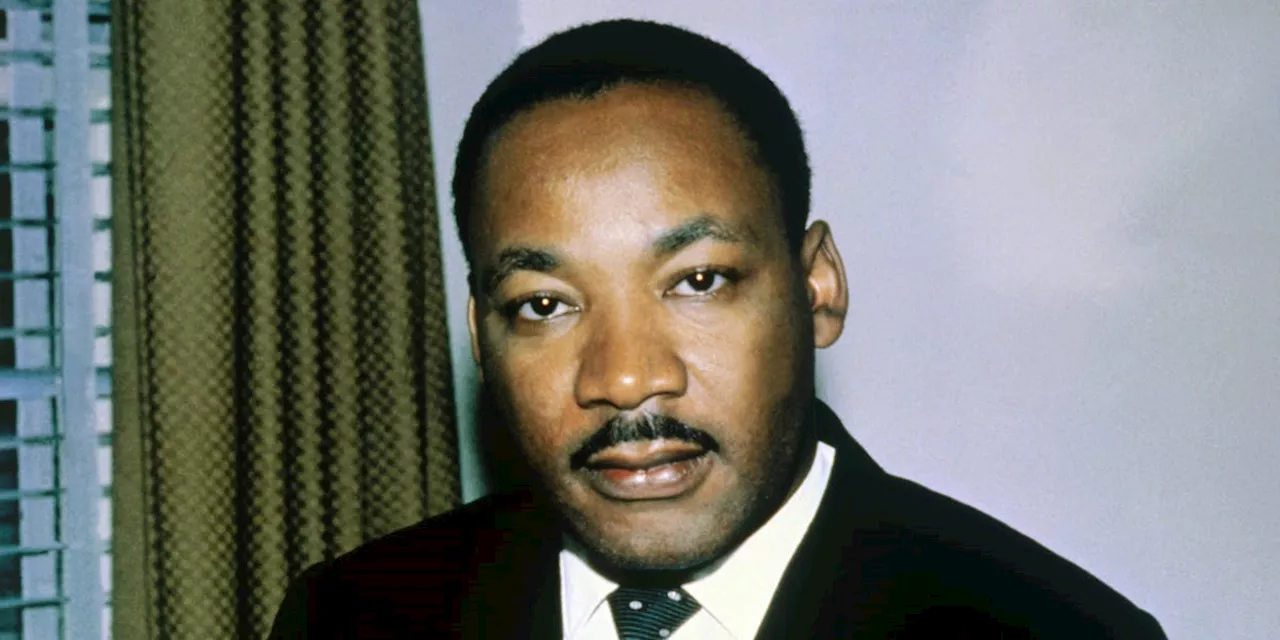

OpenAI has suspended the generation of deepfake videos of civil rights leader Martin Luther King Jr. on its Sora app after his estate objected to “disrespectful depictions” circulating online. The company has paused video generation involving Dr. King while it strengthens its protective measures for historical figures.

In a joint statement released on October 16, 2025, OpenAI and the Estate of Martin Luther King, Jr., Inc. announced their collaboration to ensure responsible representation of historical icons. The statement noted, “At King, Inc.’s request, OpenAI has paused generations depicting Dr. King as it strengthens guardrails for historical figures.” OpenAI acknowledged the free speech interests in depicting such figures, yet emphasized that public figures and their families should have control over how their likenesses are used.

The announcement follows a series of hyper-realistic deepfake videos that portrayed King in derogatory scenarios, including committing theft, and that reinforced racial stereotypes. These videos gained traction on social media, alarming advocates for ethical representation.

Launched just three weeks prior, the Sora app allows users to create lifelike videos using artificial intelligence. However, its minimal restrictions have raised concerns among legal experts and public figures alike. Critics argue that the app’s rapid rollout lacked sufficient safeguards, leaving it open to potential misuse.

When users join Sora, they upload multiple angles of their faces and record their voices, allowing others to create personalized video “cameos.” Unfortunately, the system also permitted the generation of videos featuring public figures like Princess Diana and John F. Kennedy without their families’ consent.

Legal experts have criticized OpenAI’s delayed response to these concerns. Kristelia García, an intellectual property law professor at Georgetown Law, indicated that the company’s actions were reactive rather than proactive. She noted that the AI sector often prioritizes speed over ethical considerations, highlighting a pattern of seeking forgiveness rather than permission.

Some states, including California, offer heirs strong rights over a public figure’s likeness for a period extending up to 70 years posthumously. This legal framework adds complexity to the challenge of managing deepfake technologies.

In response to the backlash, OpenAI’s CEO Sam Altman announced that rights holders will soon need to opt in for the use of AI-generated likenesses, rather than being included by default. Nevertheless, many families have expressed dissatisfaction with this approach. Following the viral spread of fake videos of Robin Williams, his daughter, Zelda Williams, publicly urged, “Please, just stop sending me AI videos of my dad… it’s NOT what he’d want.” Similarly, Bernice King took to social media to plead for an end to AI-generated depictions of her father.

Hollywood studios and talent agencies have also voiced concerns. They argue that releasing the Sora app without first obtaining consent mirrors OpenAI’s earlier approach with ChatGPT, which drew on copyrighted material before licensing agreements were in place. That approach has already led to multiple copyright lawsuits, and the Sora controversy could add to the company’s legal troubles.

OpenAI’s actions reflect a growing awareness of the ethical implications surrounding AI-generated content, particularly as it relates to the likenesses of historical figures. The company is now working to implement more stringent measures to prevent the misuse of such representations in the future.