A user has paired NotebookLM with a local large language model (LLM) and reports a significant boost in research productivity. The approach combines NotebookLM's source-grounded answers with the speed and privacy of a local LLM setup, changing how the user manages complex projects. The result is better organization, efficiency, and control in digital research workflows.

Bridging Creativity and Control

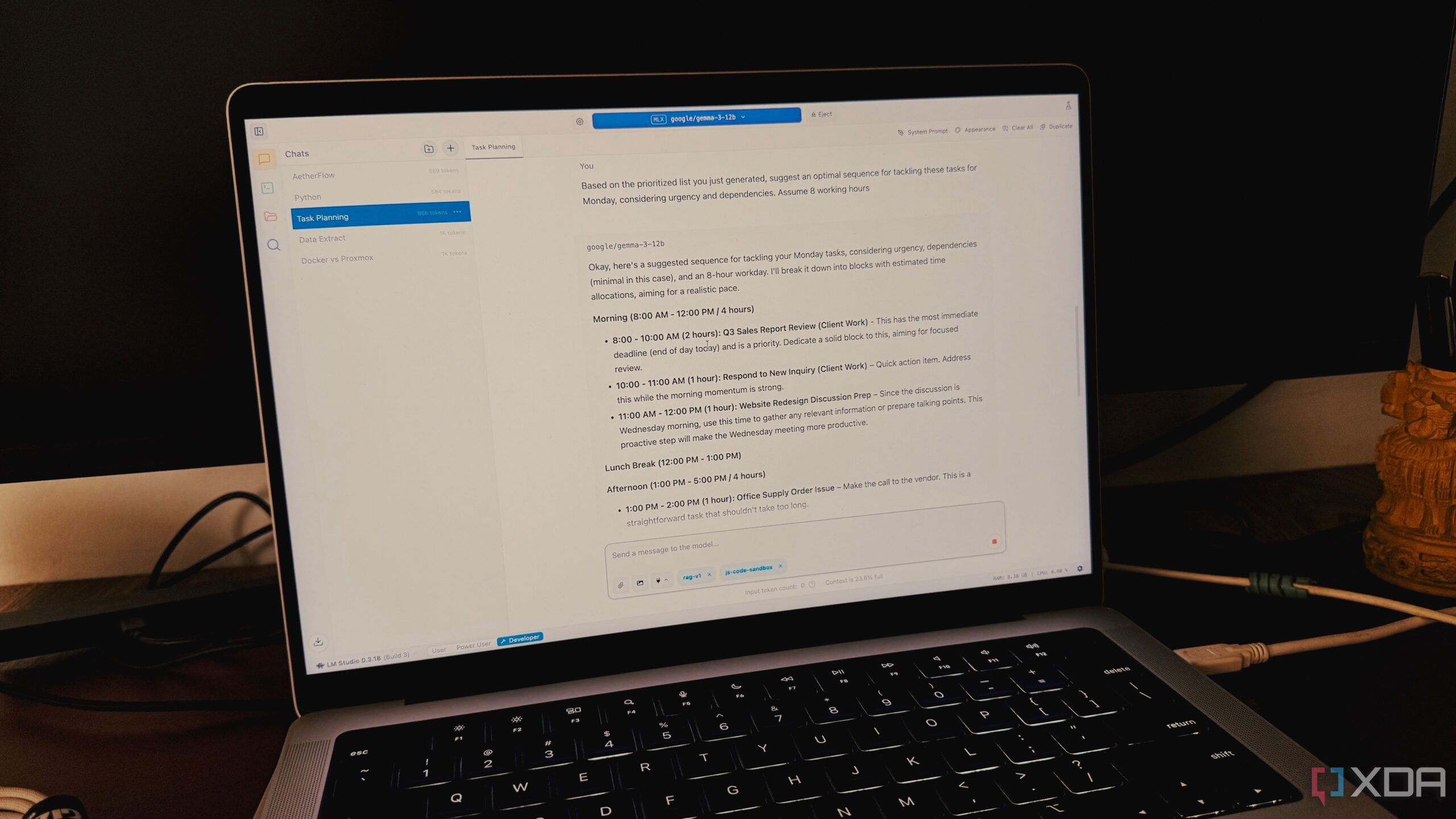

Digital research can often feel overwhelming, especially when trying to balance the need for deep context with the desire for creative control. The user, who has spent months grappling with this issue, discovered a solution by merging the strengths of both NotebookLM and a local LLM running in LM Studio. This hybrid method started as an experiment, but it quickly transformed into a major productivity enhancer.

NotebookLM excels at organizing research and generating insights from user-uploaded documents such as PDF files and blog posts. It lets users ask complex questions and summarize material effectively. It is less helpful, however, for finding relevant sources in the first place and for drafting initial notes quickly. The local LLM setup fills these gaps, providing speed, privacy, and the freedom to adjust model parameters without the constraint of API costs.

How the Integration Works

The integration process involves leveraging the local LLM for initial knowledge acquisition and organization before grounding that information with deeper sources in NotebookLM. For instance, when the user tackles a new subject like self-hosting applications via Docker, the local LLM generates a structured overview in seconds. This overview includes essential components, security practices, and networking fundamentals.
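The overview step can be sketched in a few lines of Python. This is a minimal illustration rather than the user's actual setup: it assumes LM Studio's local server is running and exposing its OpenAI-compatible API at the default address `http://localhost:1234/v1`, and the function names, prompt wording, and `local-model` placeholder are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_overview_request(topic: str, model: str = "local-model") -> dict:
    """Build a chat-completions payload asking for a structured overview.

    "local-model" is a placeholder; replace it with the model identifier
    shown in your own LM Studio instance.
    """
    return {
        "model": model,
        "temperature": 0.3,  # a low value keeps the outline focused
        "messages": [
            {
                "role": "system",
                "content": "You are a research assistant. "
                           "Reply with a structured Markdown outline.",
            },
            {
                "role": "user",
                "content": f"Give a structured overview of {topic}, covering "
                           "essential components, security practices, and "
                           "networking fundamentals.",
            },
        ],
    }


def request_overview(topic: str, url: str = LM_STUDIO_URL,
                     timeout: float = 120.0) -> str:
    """Send the request to the local server and return the generated text."""
    payload = json.dumps(build_overview_request(topic)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `request_overview("self-hosting applications via Docker")` would return the overview as Markdown text. Because the request never leaves the machine, there are no per-call API costs and parameters such as `temperature` can be adjusted freely.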

Once this structured overview is created, it is copied into a NotebookLM project and added as a source. The user can then ask targeted questions against it and receive relevant answers quickly. This method not only enhances productivity but also builds a robust, private knowledge base that combines the strengths of both platforms.
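The hand-off itself is manual, but keeping each overview as a file makes it easier to reuse. The sketch below is one possible way to do that, not something described by the user: it writes the generated text to a Markdown file that can then be uploaded, or pasted, into NotebookLM as a source. The helper names and the note in the file header are hypothetical.

```python
import re
from datetime import date
from pathlib import Path


def slugify(title: str) -> str:
    """Turn a topic title into a safe, lowercase file name stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "overview"


def save_overview(topic: str, overview_md: str, out_dir: Path) -> Path:
    """Write the overview to <out_dir>/<slug>.md and return the path."""
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{slugify(topic)}.md"
    # Mark the file as an unverified draft so it is not mistaken for a
    # primary source once it sits alongside other material.
    header = (
        f"# {topic}\n\n"
        f"_Draft overview generated by a local LLM on "
        f"{date.today().isoformat()}; unverified._\n\n"
    )
    path.write_text(header + overview_md.strip() + "\n", encoding="utf-8")
    return path
```

Labeling the file as a draft is a deliberate choice here: the overview is a starting point for questions, while the deeper sources added later remain the material that answers are checked against.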

The user has noticed significant efficiency gains since adopting this workflow. For example, they can generate audio summaries of their research material and listen to them on the go. Additionally, NotebookLM’s source-checking and citation features streamline validation, making it quick to trace a specific fact back to its origin without manual cross-referencing.

This integration has fundamentally altered the user’s approach to research. What started as a quest for marginal improvements has evolved into a comprehensive strategy that transcends the limitations of traditional cloud-based or local-only workflows. The user emphasizes that for those serious about maximizing productivity while retaining control over their data, this pairing of a local LLM with NotebookLM is a groundbreaking framework for modern research environments.

For individuals looking to enhance their research capabilities, this innovative approach is worth exploring. The potential applications of using a local LLM alongside NotebookLM are vast, offering a new model for efficient and effective digital research.