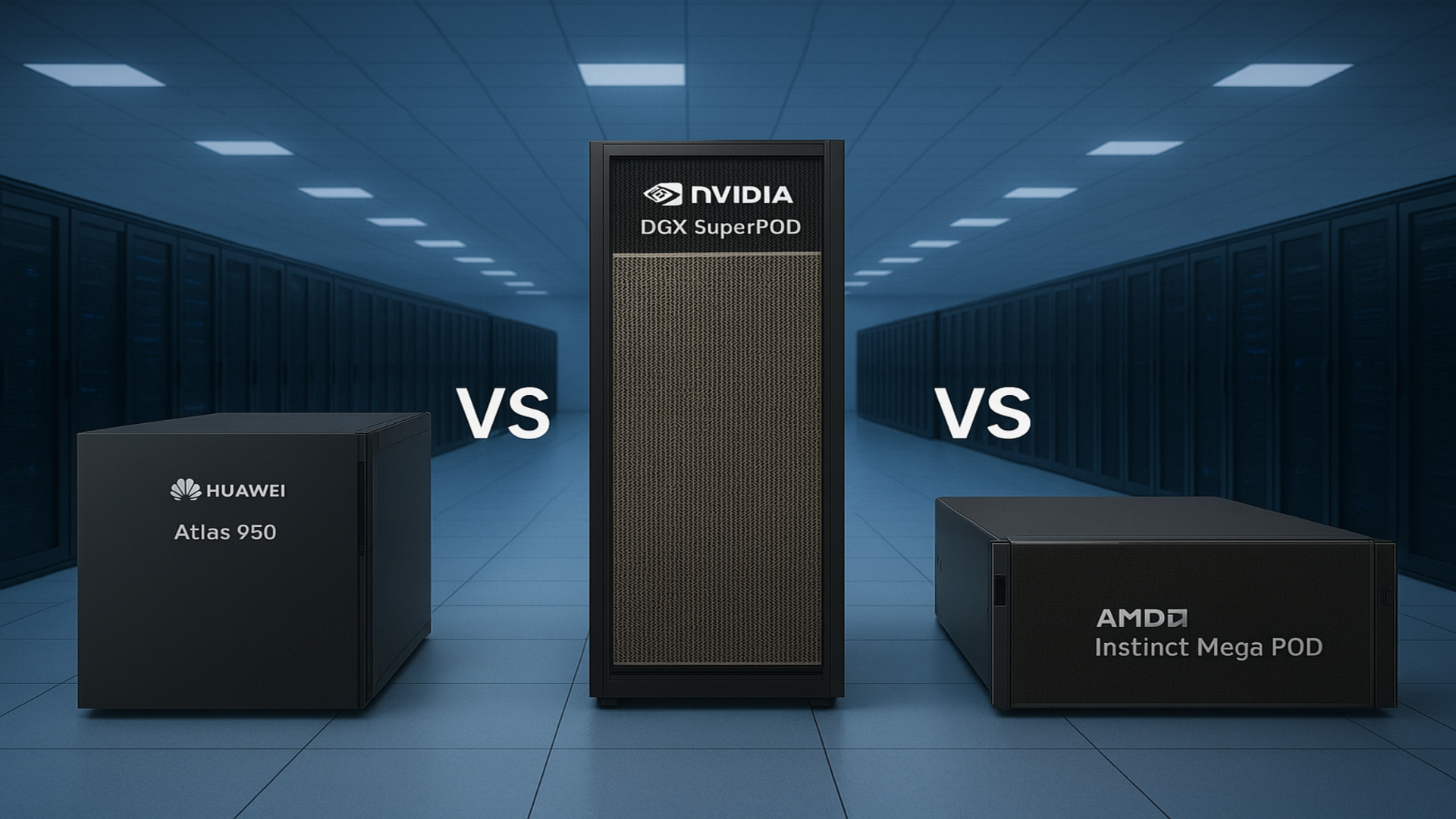

The competition among tech giants to dominate AI supercomputing is heating up, with Huawei, Nvidia, and AMD each promoting a flagship system. Each company aims to deliver high-performance computing capable of handling next-generation AI workloads, including trillion-parameter models and data-intensive research. Huawei's recently announced Atlas 950 SuperPoD, Nvidia's established DGX SuperPOD, and AMD's upcoming Instinct MegaPod illustrate three different strategies for reaching that goal.

These systems are designed to pack substantial compute, memory, and bandwidth into a scalable package, serving applications such as generative AI, drug discovery, and autonomous systems. The Atlas 950 SuperPoD combines 8,192 Ascend 950 NPUs and targets a peak of 8 exaFLOPS in FP8 and 16 exaFLOPS in FP4. If Huawei meets those targets, the system would be positioned for both AI training and inference at very large scale.
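Dividing the headline FP8 figure by the chip count gives a rough sense of what each NPU must contribute. The sketch below is back-of-envelope only: it assumes decimal SI prefixes (1 exaFLOPS = 10^18 FLOPS) and treats the system peak as a plain sum of per-chip peaks, ignoring interconnect and scaling losses. The resulting per-chip number is derived here, not a Huawei-published specification.

```python
# Back-of-envelope: implied per-NPU peak behind the Atlas 950 SuperPoD's FP8 target.
# Assumptions: decimal SI prefixes and a simple sum of per-chip peaks (no scaling losses).
EXA = 10**18
TERA = 10**12

def per_chip_flops(system_flops: float, num_chips: int) -> float:
    """Average peak FLOPS each chip must supply for the system to hit its target."""
    return system_flops / num_chips

ATLAS_950_NPUS = 8_192
ATLAS_950_FP8_FLOPS = 8 * EXA

fp8_per_npu = per_chip_flops(ATLAS_950_FP8_FLOPS, ATLAS_950_NPUS)
print(f"Implied FP8 peak per NPU: {fp8_per_npu / TERA:.1f} TFLOPS")
```

The result is just under one petaFLOPS of FP8 per chip, which is roughly what each NPU would need to sustain at peak for the system-level claim to hold.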

Performance Comparisons: Architecture and Specifications

Each system reflects its maker’s distinct philosophy. Huawei relies on its proprietary Ascend 950 chips and a custom interconnect called UnifiedBus 2.0, focusing on maximizing compute density. Nvidia, by contrast, has refined its DGX line over several generations, integrating GPUs, CPUs, networking, and storage into a cohesive environment. The DGX SuperPOD configuration compared here is built from 20 DGX A100 nodes with a total of 160 A100 GPUs, optimized for mixed-precision AI workloads.
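The 160-GPU figure follows directly from the node count, since each DGX A100 system houses eight A100 GPUs. The short sketch below reproduces that arithmetic and compares it with the Atlas 950 SuperPoD's 8,192 NPUs. Counting chips says nothing about per-chip performance, so the ratio is a measure of scale only.

```python
# Accelerator counts derived from the node configuration described in the text.
GPUS_PER_DGX_A100 = 8      # each DGX A100 system contains eight A100 GPUs
DGX_SUPERPOD_NODES = 20    # node count quoted for this DGX SuperPOD configuration
ATLAS_950_NPUS = 8_192     # Huawei's stated NPU count for the Atlas 950 SuperPoD

dgx_gpus = DGX_SUPERPOD_NODES * GPUS_PER_DGX_A100
scale_ratio = ATLAS_950_NPUS / dgx_gpus  # accelerator count only, not performance

print(f"DGX SuperPOD GPUs: {dgx_gpus}")
print(f"Atlas 950 SuperPoD has {scale_ratio:.1f}x as many accelerators")
```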

The AMD Instinct MegaPod is still in development, but early details indicate it will include 256 MI500 GPUs and 64 Zen 7 “Verano” CPUs. AMD has not yet released performance figures; it aims to rival Nvidia’s efficiency and scalability with technologies such as PCIe Gen 6 and new networking ASICs.
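The early MegaPod figures imply a fixed pairing of accelerators to host processors. Assuming the 256 GPUs are spread evenly across the 64 CPUs (AMD has not published the exact tray topology, so this is an assumption), each CPU would serve four GPUs. The sketch below also applies the same calculation to a DGX A100 node, which pairs eight A100 GPUs with two AMD EPYC CPUs.

```python
# Implied accelerator-to-host ratio for the AMD Instinct MegaPod.
# Assumption: GPUs are spread evenly across CPUs; the actual tray topology is unconfirmed.
MEGAPOD_GPUS = 256  # MI500 GPUs, per early reports
MEGAPOD_CPUS = 64   # Zen 7 "Verano" CPUs, per early reports

def gpus_per_cpu(gpus: int, cpus: int) -> float:
    """Average number of GPUs per host CPU."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return gpus / cpus

megapod_ratio = gpus_per_cpu(MEGAPOD_GPUS, MEGAPOD_CPUS)
# For reference: a DGX A100 node pairs 8 A100 GPUs with 2 AMD EPYC CPUs.
dgx_a100_ratio = gpus_per_cpu(8, 2)

print(f"MegaPod: {megapod_ratio:.0f} GPUs per CPU")
print(f"DGX A100 node: {dgx_a100_ratio:.0f} GPUs per CPU")
```

On these assumptions both designs land on the same 4:1 ratio, so the MegaPod's difference lies in scale and interconnect rather than in host-to-accelerator balance.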

Memory capacity and interconnect speed are critical for these systems. Huawei claims that the Atlas 950 SuperPoD can support over a petabyte of memory and reach a system bandwidth of 16.3 petabytes per second. Nvidia’s DGX SuperPOD, by comparison, offers 52.5 terabytes of system memory and high-bandwidth GPU memory, with InfiniBand connections of up to 200 Gbps per node. AMD’s MegaPod promises to go beyond current networking capabilities with Vulcano switch ASICs rated at 102.4 Tbps.
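The figures in this paragraph mix units: Huawei's 16.3 petabytes per second is quoted in bytes, while the InfiniBand and Vulcano figures are in bits per second. The sketch below normalizes them, assuming decimal prefixes and 8 bits per byte, and treating "over a petabyte" as exactly 1 PB so the memory ratio is a lower bound. The per-NPU bandwidth is a naive average of the system-wide figure, not a per-chip link rate Huawei has published.

```python
# Normalize the memory and bandwidth figures quoted above to common units.
# Assumptions: decimal (SI) prefixes, 8 bits per byte, and "over a petabyte"
# treated as exactly 1 PB so that the memory ratio is a lower bound.
GIGA = 10**9
TERA = 10**12
PETA = 10**15

def bits_to_bytes(bits_per_s: float) -> float:
    """Convert a rate in bits per second to bytes per second."""
    return bits_per_s / 8

atlas_memory_bytes = 1 * PETA      # lower bound for "over a petabyte"
dgx_memory_bytes = 52.5 * TERA
atlas_bandwidth = 16.3 * PETA      # bytes/s, system-wide
atlas_npus = 8_192

infiniband_link = bits_to_bytes(200 * GIGA)   # 200 Gbps -> bytes/s
vulcano_switch = bits_to_bytes(102.4 * TERA)  # 102.4 Tbps -> bytes/s

memory_ratio = atlas_memory_bytes / dgx_memory_bytes
avg_bw_per_npu = atlas_bandwidth / atlas_npus  # naive average, not a link spec

print(f"Memory ratio (Atlas / DGX): at least {memory_ratio:.1f}x")
print(f"Average Atlas bandwidth per NPU: {avg_bw_per_npu / TERA:.2f} TB/s")
print(f"200 Gbps InfiniBand link: {infiniband_link / GIGA:.0f} GB/s")
print(f"102.4 Tbps Vulcano switch: {vulcano_switch / TERA:.1f} TB/s")
```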

Physical Design and Market Availability

The physical architecture of each system varies significantly. Huawei’s design scales from a single SuperPoD to a SuperCluster of up to half a million Ascend chips, and a single SuperPoD alone spans more than a hundred cabinets across roughly a thousand square meters. Nvidia’s DGX SuperPOD is designed for compactness and can be deployed without extensive data hall space. AMD’s MegaPod adopts a modular layout, with two racks of compute trays and one dedicated networking rack.
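To see why the SuperCluster is a multi-SuperPoD deployment, divide the half-million-chip ceiling by the 8,192 NPUs in one SuperPoD. The same arithmetic applied to AMD's layout gives a per-rack GPU density, assuming the 256 MI500 GPUs are split evenly between the two compute racks (an assumption; AMD has not detailed the split). The helper `superpods_needed` is a hypothetical name used only for this sketch.

```python
# Scaling arithmetic for the physical layouts described above.
NPUS_PER_SUPERPOD = 8_192
SUPERCLUSTER_CHIPS = 500_000   # "up to half a million" Ascend chips

MEGAPOD_GPUS = 256
MEGAPOD_COMPUTE_RACKS = 2      # plus one networking rack, per early reports

def superpods_needed(total_chips: int, chips_per_pod: int) -> int:
    """Smallest whole number of SuperPoDs that can hold total_chips (integer ceiling)."""
    return (total_chips + chips_per_pod - 1) // chips_per_pod

pods = superpods_needed(SUPERCLUSTER_CHIPS, NPUS_PER_SUPERPOD)
gpus_per_rack = MEGAPOD_GPUS // MEGAPOD_COMPUTE_RACKS  # assumes an even split

print(f"SuperPoDs for ~500k chips: {pods}")
print(f"MegaPod GPUs per compute rack: {gpus_per_rack}")
```

Integer ceiling division avoids floating-point rounding and makes explicit that a partially filled SuperPoD still counts as one more unit to build.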

In terms of market availability, Nvidia’s DGX SuperPOD can be purchased today. Huawei expects to launch the Atlas 950 SuperPoD in the fourth quarter of 2026, while AMD’s MegaPod is slated for release in 2027.

As these companies compete for dominance in AI supercomputing, each system brings its own advantages and trade-offs. Huawei’s approach emphasizes raw computational power and aggregate bandwidth, but its proprietary design could pose adoption barriers. Nvidia’s DGX SuperPOD, though smaller in scale, offers a well-tested platform that enterprises can deploy immediately. AMD, positioning itself as a future disruptor, is generating anticipation with its new architecture but has yet to publish concrete performance figures.

The race among these three companies will likely shape high-performance computing across many sectors. The competition is not only about peak numbers; it is about how well these systems meet the growing demands of AI research and deployment in the coming years.