On October 23, 2025, the Whiting School of Engineering’s Department of Computer Science welcomed Aaron Roth, a professor of computer and cognitive science from the University of Pennsylvania, to deliver a talk titled “Agreement and Alignment for Human-AI Collaboration.” The presentation highlighted findings from three significant papers focused on improving the interactions between humans and artificial intelligence in decision-making processes.

As artificial intelligence continues to permeate various sectors, researchers aim to clarify how AI can enhance human decision-making. Roth illustrated this point by discussing AI’s potential role in assisting medical professionals with patient diagnoses. In this scenario, AI would analyze diverse factors, including historical diagnoses, blood types, and symptoms, to formulate predictions. The human doctor would then review these predictions, using their own expertise to agree or disagree based on the individual patient’s circumstances.

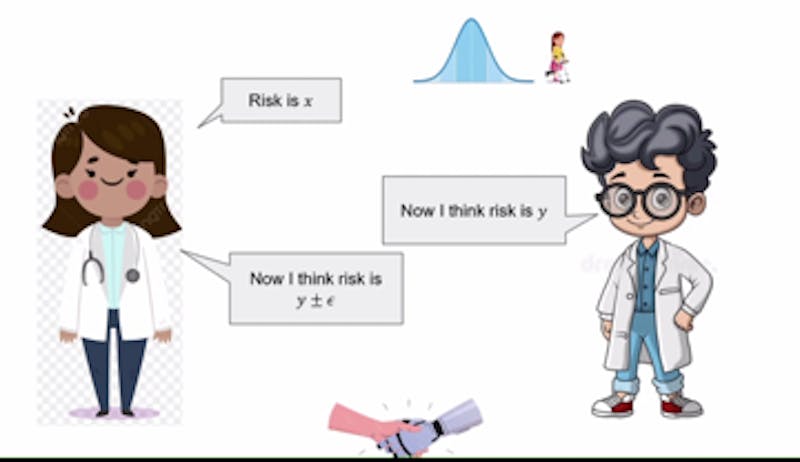

Roth introduced a crucial concept: when the AI model and the doctor disagree, the two can engage in a series of exchanges to integrate their perspectives. This iterative process relies on a shared understanding, referred to as a common prior, in which both the AI and the doctor start with the same foundational assumptions about the world, even if they hold different pieces of information. Roth calls this setting Perfect Bayesian Rationality, wherein each party is aware of the limits of the other’s knowledge and works toward a consensus.
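To make the idea of a common prior concrete, here is a minimal Python sketch. It is not the protocol from the talk; it only illustrates two parties who share one prior but hold different evidence. The 10% prior, the likelihood ratios, and the `posterior` helper are all hypothetical values chosen for illustration, and the two pieces of evidence are assumed to be conditionally independent.

```python
from fractions import Fraction

def posterior(prior, likelihood_ratios):
    """Bayes' rule in odds form: posterior odds = prior odds * product of
    likelihood ratios. Assumes the pieces of evidence are conditionally
    independent given the true state (an assumption of this sketch)."""
    odds = prior / (1 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1 + odds)

# Hypothetical shared starting point: both parties believe 10% of such
# patients have the condition.
COMMON_PRIOR = Fraction(1, 10)

# Hypothetical private evidence, expressed as likelihood ratios
# P(evidence | condition) / P(evidence | no condition).
AI_LAB_RESULT = Fraction(9, 2)    # seen only by the AI; points toward the condition
DOCTOR_FINDING = Fraction(1, 2)   # seen only by the doctor; points away from it

ai_view = posterior(COMMON_PRIOR, [AI_LAB_RESULT])
doctor_view = posterior(COMMON_PRIOR, [DOCTOR_FINDING])
pooled_view = posterior(COMMON_PRIOR, [AI_LAB_RESULT, DOCTOR_FINDING])

if __name__ == "__main__":
    print("AI alone:      ", ai_view)
    print("Doctor alone:  ", doctor_view)
    print("Both combined: ", pooled_view)
```

In this toy setup the two start from the same prior yet disagree, because each has seen something the other has not. The combined estimate lies between their individual ones. In a real conversation the parties trade predictions rather than raw evidence, which is part of what makes the problem harder than this sketch suggests.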

While the theory is compelling, Roth acknowledged practical challenges. Establishing a common prior is hard given the complexity of real-world scenarios, and agreement between human and AI becomes harder still when the topic is multidimensional, as with hospital diagnostic codes.

To address these challenges, Roth proposed calibration as a tool for facilitating agreement. He described calibration as a test of accuracy, much like checking the reliability of a weather forecast. “You can sort of design tests such that they would pass those tests if they were forecasting true probabilities,” Roth explained. This concept extends to the interaction between doctors and AI, where claims made by one party can influence the subsequent assertions of the other. For instance, if an AI estimates a 40% risk associated with a treatment, and the doctor assesses it as 35%, the AI’s next claim would logically fall between these two figures. Repeating this exchange lets the two reach agreement more quickly.
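The weather-forecast analogy can be sketched as a simple binned calibration check. This is a common textbook version of the test, not necessarily the notion of calibration used in Roth's papers. The function names, the ten equal-width bins, and the simulated forecasters are assumptions made for illustration.

```python
import random

def calibration_table(forecasts, outcomes, n_bins=10):
    """Group forecasts into equal-width probability bins and return, for each
    non-empty bin, (mean forecast, observed event frequency, count)."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # a forecast of 1.0 goes in the last bin
        bins[idx].append((p, y))
    rows = []
    for b in bins:
        if b:
            mean_p = sum(p for p, _ in b) / len(b)
            freq = sum(y for _, y in b) / len(b)
            rows.append((mean_p, freq, len(b)))
    return rows

def expected_calibration_error(forecasts, outcomes, n_bins=10):
    """Weighted average gap between what was forecast and what happened."""
    n = len(forecasts)
    rows = calibration_table(forecasts, outcomes, n_bins)
    return sum(count / n * abs(mean_p - freq) for mean_p, freq, count in rows)

def simulate(n=20000, shift=0.0, seed=0):
    """Hypothetical forecaster: reports the true probability plus `shift`
    (capped at 1.0). shift=0.0 means it forecasts true probabilities."""
    rng = random.Random(seed)
    forecasts, outcomes = [], []
    for _ in range(n):
        p_true = rng.random()
        outcomes.append(1 if rng.random() < p_true else 0)
        forecasts.append(min(1.0, p_true + shift))
    return forecasts, outcomes

if __name__ == "__main__":
    for label, shift in [("honest", 0.0), ("inflated", 0.2)]:
        f, o = simulate(shift=shift)
        print(label, round(expected_calibration_error(f, o), 3))
```

A forecaster that reports true probabilities passes this test with an error near zero, while the one that inflates every estimate by 20 percentage points does not. The check only looks at forecasts and outcomes, so it needs no access to how the forecaster reasons.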

Roth also addressed potential conflicts of interest in AI models. For example, an AI developed by a pharmaceutical company may favor that company’s products over others. To mitigate this risk, he suggested that doctors consult multiple large language models (LLMs) from different drug companies. By comparing the recommendations of several models, physicians can choose the most appropriate treatment while fostering competition among providers, which in turn leads to better-aligned, less biased models.

The discussion culminated with Roth’s exploration of real probabilities, which represent the true underlying dynamics of the world. While these probabilities offer the highest accuracy, they are often not essential for practical applications. Instead, in many cases, it suffices to derive unbiased probabilities from available data without needing to navigate the complexities of perfect reasoning. This pragmatic approach enables doctors and AI to collaborate effectively, reaching informed agreements regarding treatments, diagnoses, and other critical healthcare decisions.

Roth’s insights provide a framework for enhancing the synergy between human expertise and artificial intelligence, paving the way for more informed and accurate decision-making in the medical field and beyond.