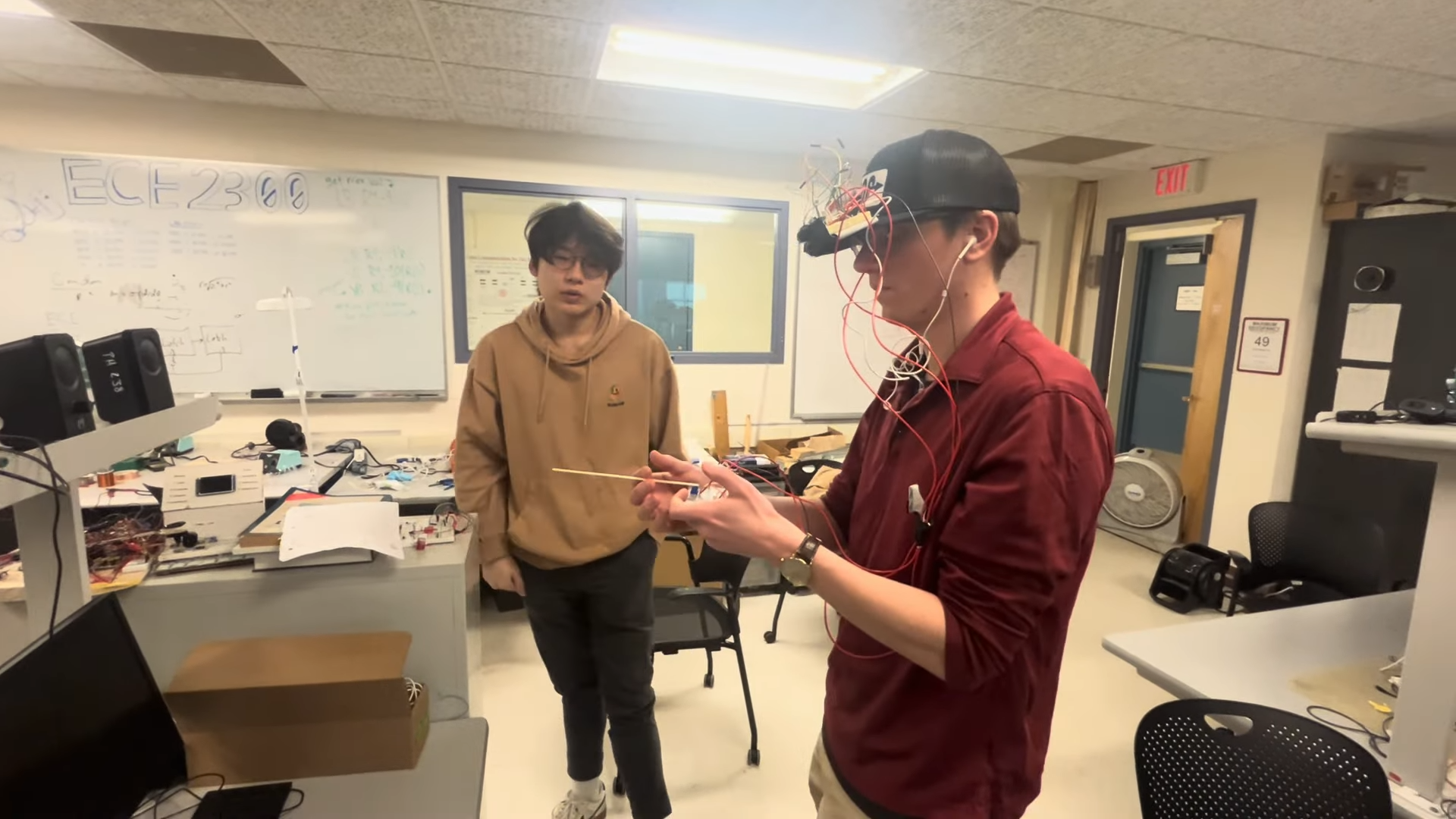

Students at Cornell University have built a hat with a spatial audio system that extends what the wearer can hear about their surroundings. The project, by Anishka Raina, Arnav Shah, and Yoon Kang for Cornell's ECE4760 course, gives the wearer real-time audio feedback about nearby objects so they can better understand what is around them.

At the core of the project is a Raspberry Pi Pico microcontroller board, which processes data from a TF-Luna LiDAR sensor. The TF-Luna is a single-point time-of-flight sensor: it measures the distance to whatever object lies directly in its line of sight. Because the sensor is mounted on the hat and can be panned by hand from side to side, the wearer can sweep it across the scene to scan for obstacles, building up a picture of the surroundings one reading at a time.
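The write-up doesn't say how the Pico reads the sensor. The TF-Luna can stream measurements over UART as 9-byte frames: two `0x59` header bytes, a little-endian distance in centimeters, a little-endian signal strength, two temperature bytes, and a checksum equal to the low byte of the sum of the first eight bytes. Assuming the hat uses that UART mode, a minimal parser might look like the sketch below. The function names are hypothetical, and the temperature field is ignored.

```python
FRAME_LEN = 9
HEADER = 0x59


def parse_tf_luna_frame(frame):
    """Decode one 9-byte TF-Luna UART frame into (distance_cm, strength).

    Returns None if the header or checksum is wrong. The two temperature
    bytes (indices 6 and 7) are ignored in this sketch.
    """
    if len(frame) != FRAME_LEN or frame[0] != HEADER or frame[1] != HEADER:
        return None
    # Checksum: low byte of the sum of bytes 0..7.
    if sum(frame[:8]) & 0xFF != frame[8]:
        return None
    distance_cm = frame[2] | (frame[3] << 8)  # little-endian distance
    strength = frame[4] | (frame[5] << 8)     # little-endian signal strength
    return distance_cm, strength


def iter_readings(stream):
    """Scan a raw byte buffer and yield every valid (distance_cm, strength)."""
    i = 0
    while i + FRAME_LEN <= len(stream):
        reading = parse_tf_luna_frame(stream[i:i + FRAME_LEN])
        if reading is not None:
            yield reading
            i += FRAME_LEN  # skip past the frame just consumed
        else:
            i += 1          # resynchronize one byte at a time
```

Scanning byte by byte lets the parser recover if it starts reading mid-frame. Each distance would then be paired with the current pan angle of the sensor before being turned into sound.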

The hat does not track head movement. Instead, a potentiometer lets the wearer indicate which direction they are facing, and the Pico uses this input to interpret the LiDAR readings. From the processed data, the Pico generates a stereo audio signal that conveys the distance and direction of nearby objects, positioning each sound with a technique known as interaural time difference (ITD). ITD relies on the fact that a sound coming from one side reaches the nearer ear slightly before the farther one; the brain uses this sub-millisecond difference to judge where the sound came from.
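The article doesn't give the model the students used to compute the delays. As an illustration, the sketch below swaps in the Woodworth spherical-head approximation, ITD = (r/c)(θ + sin θ), a common textbook model that may differ from the actual firmware. It also assumes that the pan angle and the potentiometer-derived facing direction share one reference, that positive angles lie to the wearer's right, that the potentiometer is read by a 12-bit ADC spanning ±90°, and that audio runs at 44.1 kHz. All constants and function names are hypothetical. How distance is encoded in the sound isn't specified, so the sketch covers direction only.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at about 20 °C
SAMPLE_RATE_HZ = 44_100     # hypothetical audio sample rate


def pot_to_facing_deg(adc_value, adc_max=4095, span_deg=180.0):
    """Hypothetical mapping: 12-bit ADC reading -> facing angle in [-90, +90] degrees."""
    return (adc_value / adc_max - 0.5) * span_deg


def relative_azimuth_deg(object_deg, facing_deg):
    """Object angle relative to where the wearer faces, wrapped to [-180, 180)."""
    return (object_deg - facing_deg + 180.0) % 360.0 - 180.0


def woodworth_itd_s(azimuth_deg):
    """Woodworth ITD in seconds for an azimuth in [-180, 180].

    Positive result: the source is on the right, so the right ear hears it first.
    """
    # Fold rear angles onto the front: ITD alone cannot tell front from back.
    if azimuth_deg > 90.0:
        azimuth_deg = 180.0 - azimuth_deg
    elif azimuth_deg < -90.0:
        azimuth_deg = -180.0 - azimuth_deg
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


def apply_itd(mono, azimuth_deg, sample_rate=SAMPLE_RATE_HZ):
    """Return (left, right) sample lists with the farther ear delayed."""
    itd = woodworth_itd_s(azimuth_deg)
    delay = round(abs(itd) * sample_rate)   # ITD rounded to whole samples
    early = list(mono) + [0.0] * delay      # nearer ear, padded to equal length
    late = [0.0] * delay + list(mono)       # farther ear, same signal delayed
    if itd >= 0:  # source on the right: left ear hears it later
        return late, early
    return early, late
```

With these constants, a source at 90° gives an ITD of about 0.66 ms, or 29 samples at 44.1 kHz. On the Pico itself the delay would more likely be applied sample by sample in the audio output loop rather than by building lists.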

The project is an exercise in sensory augmentation. By translating spatial data into sound cues, the hat can help with obstacle detection and offers an alternative interface for people who want better awareness of their surroundings while navigating.

The design follows similar projects featured previously that combine sensors with everyday wearables. As demand for assistive technology grows, student projects like this one from Cornell show how drawing on several disciplines, from electronics to signal processing to the study of human hearing, can address practical problems.