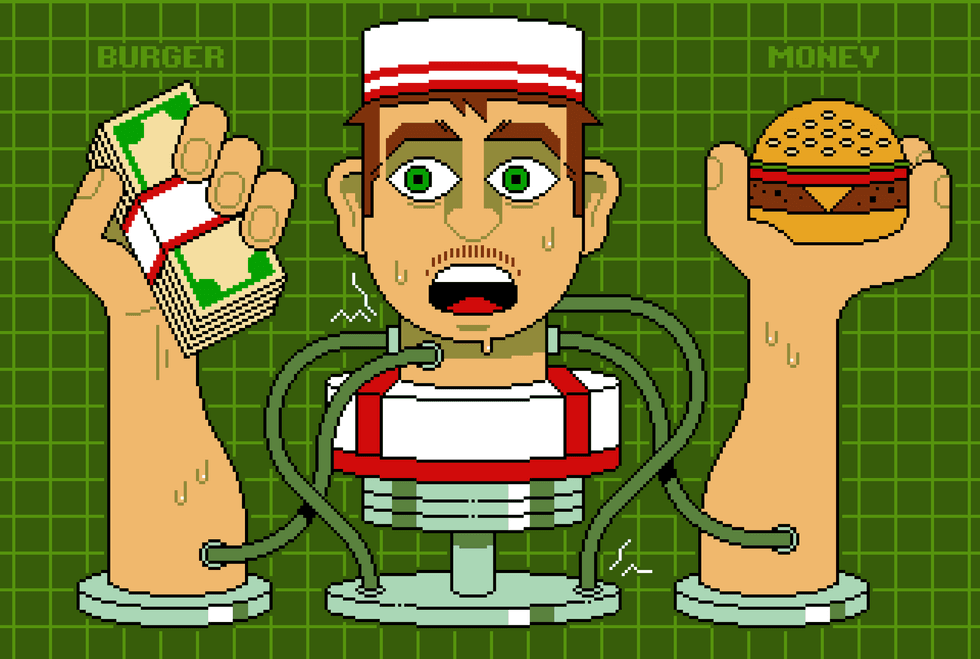

Large language models (LLMs) remain alarmingly susceptible to prompt injection attacks. Much as a drive-through customer might issue strange or illicit requests to the worker at the window, an attacker can trick an LLM into bypassing its built-in safety protocols. This raises significant concerns about the security and reliability of AI systems across applications.

Prompt injection involves users crafting prompts designed to manipulate LLMs into executing tasks they should not perform, such as revealing sensitive information or following harmful instructions. This manipulation often succeeds because LLMs lack the nuanced understanding of context that humans inherently possess. For instance, while a chatbot might refuse to provide a method for creating a bioweapon, it could inadvertently share those details within a fictional narrative upon request.

The challenge stems from the fact that LLMs interpret commands based solely on textual data rather than the intricate web of social and contextual cues that humans navigate daily. According to AI expert Simon Willison, when an LLM is given misleading context, it often fails to recalibrate and continues down the incorrect path. This limitation is particularly concerning in scenarios where the stakes are high, such as customer service interactions in fast-food environments.

The Human Context: Layers of Defense

Humans employ multiple layers of judgment that inform their decision-making processes. These layers include general instincts, social learning, and specific situational training. For instance, a fast-food worker has been trained to recognize abnormal requests, such as handing over cash, while also relying on instincts shaped by years of social interaction.

In contrast, LLMs lack this depth of understanding. As they process input, they flatten context into mere text similarity, disregarding the relational and normative layers that guide human behavior. When faced with an unusual request, a human can pause, assess the situation, and seek guidance, whereas an LLM typically responds based on its programming without this critical interruption reflex.

The implications of this discrepancy are far-reaching. For example, there have been instances where scammers have exploited the naivety of LLMs, convincing them to produce results that a human would immediately recognize as suspicious. Human defenses are not infallible either: one notable telephone scam in the late 1990s manipulated fast-food managers into performing bizarre acts under the pretense of authority.

The Road Ahead for AI Security

As AI technology advances, the challenge of prompt injection will likely intensify, particularly as LLMs gain autonomy and the ability to perform multi-step tasks. The core issue lies in the blend of independence and overconfidence that current LLMs exhibit. They are engineered to deliver answers rather than express uncertainty, which can lead to misguided actions and decisions.

The AI researcher Yann LeCun suggests that embedding AIs within a physical presence and providing them with real-world experiences could enhance their contextual understanding. This approach may help to develop a more sophisticated sense of social identity, thereby reducing their vulnerability to manipulation.

Ultimately, the security of AI agents may present a trilemma: achieving speed, intelligence, and security simultaneously appears unattainable. In practical applications, such as a drive-through, speed and security are the essential pair, which means giving up general-purpose intelligence. An ideal AI system would be narrowly focused on specific tasks and escalate atypical requests to human supervisors. Without such safeguards, every interaction risks becoming a gamble, with potentially significant consequences.
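
The narrow-scope, escalate-when-unsure design described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the menu, the quantity cap, and the names `MENU`, `MAX_QUANTITY`, `Decision`, and `decide` are all hypothetical, and the sketch assumes the model has already turned the customer's words into a structured (item, quantity) request.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical menu and limit, invented for this illustration.
MENU = {"burger": 5.49, "fries": 2.29, "soda": 1.79}
MAX_QUANTITY = 20


class Action(Enum):
    FULFILL = "fulfill"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Decision:
    action: Action
    reason: str


def decide(item: str, quantity: int) -> Decision:
    """Fulfill only requests on the allowlist; hand everything else to a human."""
    name = item.strip().lower()
    if name not in MENU:
        return Decision(Action.ESCALATE, f"'{name}' is not on the menu")
    if not 1 <= quantity <= MAX_QUANTITY:
        return Decision(
            Action.ESCALATE, f"quantity {quantity} is outside 1..{MAX_QUANTITY}"
        )
    return Decision(Action.FULFILL, f"{quantity} x {name}")


if __name__ == "__main__":
    # An ordinary order, an off-menu request, and an implausible quantity.
    for item, qty in [("Burger", 2), ("cash from the register", 1), ("fries", 10000)]:
        decision = decide(item, qty)
        print(f"{item!r} x{qty}: {decision.action.value} ({decision.reason})")
```

Because the check runs in ordinary code outside the model, no wording in the customer's request can talk it out of escalating. The cost is the one the trilemma predicts: anything off-script waits for a human.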

As the landscape of AI technology evolves, addressing the vulnerabilities inherent in LLMs will require innovative solutions. The journey toward developing AI that can effectively navigate complex social contexts remains ongoing, as researchers strive to strike a balance between efficiency and safety in AI applications.