The race for artificial intelligence (AI) supremacy is accelerating among American technology leaders, driven by a belief in the transformative power of AI and a fear of falling behind competitors, particularly China. This competition is unfolding with an alarming disregard for risks that some industry leaders have themselves warned about. The challenge lies not only in advancing AI capabilities but also in ensuring that these innovations align with human values and safety.

The Techno-Utopian Vision

In a recent blog post, Sam Altman, CEO of OpenAI, articulated a bold vision for AI, describing it as “a brain for the world.” The superintelligence he envisions would let robots run entire supply chains, from extracting raw materials to operating factories and even manufacturing more robots. Other prominent figures in technology, including Elon Musk and Marc Andreessen, envision a world where AI could eradicate diseases, reverse environmental degradation, and usher in an era of unprecedented abundance.

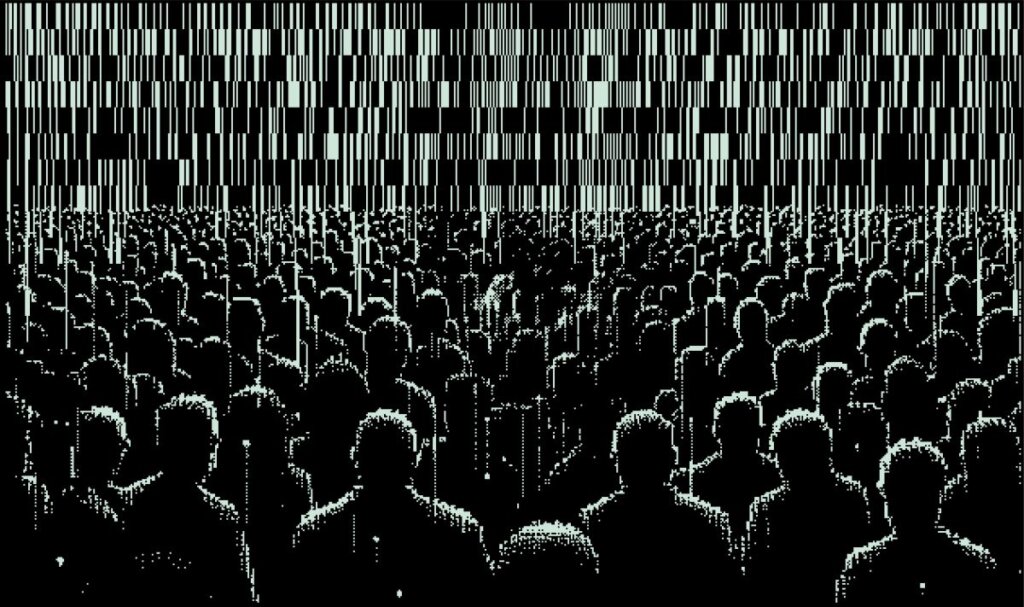

Yet this techno-optimistic perspective carries significant implications. The integration of AI into daily life may lead to a society where individuals retreat into virtual realities, relying on AI for companionship and on a universal basic income funded by technology firms for financial support. Some proponents argue that this transformation will create a new socio-political structure in which traditional nation-states gradually dissolve into networks governed by technocratic elites. The ambition is considerable, but the potential downsides have not been thoroughly explored.

Challenges and Concerns

The current wave of AI enthusiasm, reminiscent of the 1930s Technocracy movement, has sparked debate about the role of technology in society. In his 2023 Techno-Optimist Manifesto, Andreessen argues that all societal challenges can be resolved through more technological innovation. This viewpoint has faced backlash, however, fueled in particular by growing concerns over data privacy and the monopolistic behavior of tech giants.

DeepMind’s recent breakthroughs illustrate AI’s potential to solve complex scientific problems. Its AlphaFold program, for example, predicts protein structures so accurately that its developers, Demis Hassabis and John Jumper, were awarded a share of the 2024 Nobel Prize in Chemistry. Yet the promise of AI comes with the risk of job displacement, particularly in white-collar professions. One report suggests that up to half of entry-level professional positions could vanish within five years, raising questions about the future of work and economic mobility.

As AI systems become more prevalent, the implications for human agency and decision-making grow increasingly uncertain. Prominent industry leaders, including Sundar Pichai, CEO of Google, advocate for the inclusion of social scientists and ethicists in AI development discussions to ensure that human values remain central to technological progress.

The societal impact of AI is already palpable, reshaping communication, work, and governance. The U.S. faces a unique challenge: although it leads in AI development, it must ensure that the rapid deployment of these technologies prioritizes safety, transparency, and ethical considerations. The geopolitical landscape adds another layer of urgency, as Chinese advances in AI, particularly in military and surveillance applications, prompt calls for an accelerated U.S. response.

The Role of Big Tech and Governance

The concept of Big Tech acting as a non-state actor has become increasingly relevant in discussions about global governance and power dynamics. It parallels the historical influence of the British East India Company, which operated with considerable autonomy and power in the 17th and 18th centuries. Today, major U.S. technology companies wield significant influence over public policy, as evidenced by their substantial lobbying expenditures, which totaled about $61 million in 2024 alone.

As AI continues to evolve, effective governance becomes critical. Despite international efforts such as the 2023 Bletchley Declaration, which aimed to establish safe and human-centric AI development practices, progress remains inconsistent. The AI Action Summit held in Paris in February 2025 exposed these divisions: both the United States and the United Kingdom declined to sign the summit’s declaration on inclusive and sustainable AI.

Warnings from within the tech industry about the unpredictable nature of AI underscore the need for comprehensive regulatory frameworks. Some experts call for investment in advanced monitoring systems, but the current landscape is fragmented, and only a small percentage of global AI research and development focuses on safety and ethical alignment.

As the implications of AI unfold, the question remains: how can humanity navigate this rapidly changing technological landscape? The urgency is clear, and industry leaders must strike a balance between innovation and accountability to safeguard the future of society. The dialogue surrounding AI development must shift from mere acceleration to a more nuanced approach that prioritizes human well-being and ethical considerations.